Note: `ks` is an alias for `sudo kubectl`.

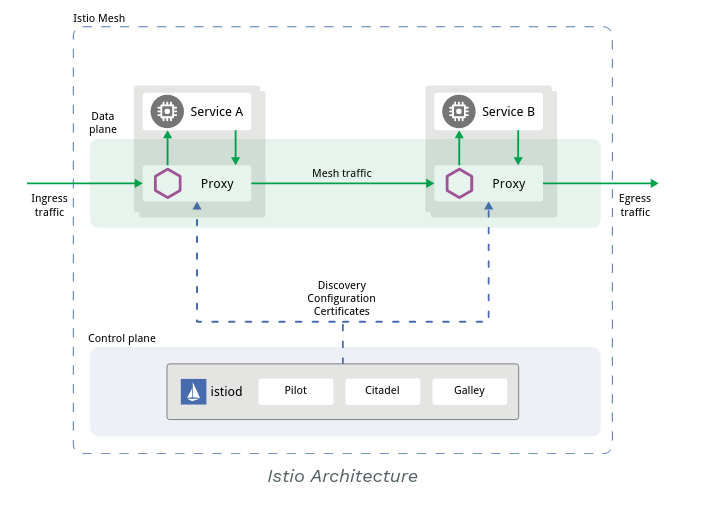

Architecture diagram

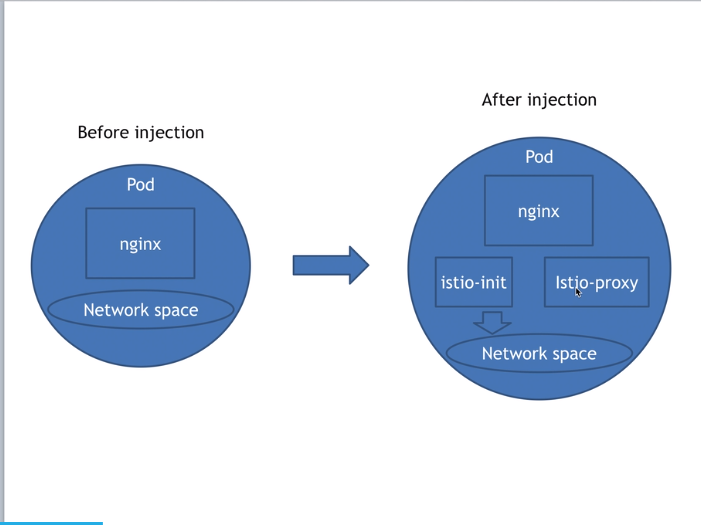

Create a Deployment (saved as jiuxi.yaml) whose pod we will inject the sidecar into

    apiVersion: apps/v1
    kind: Deployment
    metadata:
      name: jiuxi
      labels:
        app: jiuxi
    spec:
      replicas: 1
      selector:
        matchLabels:
          app: jiuxi
      template:
        metadata:
          labels:
            app: jiuxi
        spec:
          containers:
          - name: nginx
            image: nginx:1.14-alpine
            imagePullPolicy: IfNotPresent
            ports:
            - containerPort: 80

Create a new namespace and create the Deployment in it:

    ks create ns jiuxi-ns
    ks create -f jiuxi.yaml -n jiuxi-ns

The pod contains only one container (the READY column shows 1/1):

    $ ks get -n jiuxi-ns pods
    NAME                    READY   STATUS    RESTARTS   AGE
    jiuxi-ff6496997-n7jrk   1/1     Running   0          3m55s

This pod has not been injected with the Istio sidecar.
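The READY column is a quick way to spot pods without a sidecar: an injected pod has at least two containers. The sketch below is only an illustration; `pods_without_sidecar` is a hypothetical helper name, and it assumes the default `kubectl get pods` column layout (NAME first, READY second).

```shell
# Reads `kubectl get pods` output on stdin and prints the names of pods whose
# READY column lists fewer than two containers (so no sidecar can be present).
pods_without_sidecar() {
  awk 'NR > 1 {
    split($2, ready, "/")            # "1/1" -> ready[1]="1", ready[2]="1"
    if (ready[2] + 0 < 2) print $1   # total container count below 2
  }'
}
```

It could be used as `ks get pods -n jiuxi-ns | pods_without_sidecar`.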

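Manually generating the injected YAML

Generate the injected manifest and apply it. The output shows how the apply proceeds: a new pod is created first, and the old pod is deleted once the new one is ready.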

    $ sudo istioctl kube-inject -f jiuxi.yaml > jiuxi-inject.yaml
    $ ks apply -f jiuxi-inject.yaml -n jiuxi-ns
    Warning: kubectl apply should be used on resource created by either kubectl create --save-config or kubectl apply
    deployment.apps/jiuxi configured
    $ ks get -n jiuxi-ns pods
    NAME                     READY   STATUS            RESTARTS   AGE
    jiuxi-5479899b95-fvtsb   0/2     PodInitializing   0          21s
    jiuxi-ff6496997-n7jrk    1/1     Running           0          10m
    $ ks get -n jiuxi-ns pods
    NAME                     READY   STATUS    RESTARTS   AGE
    jiuxi-5479899b95-fvtsb   2/2     Running   0          32s
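The inject and apply steps can also be chained without an intermediate file, and `kubectl rollout status` can replace polling `get pods` by hand. This is a minimal sketch, not the tutorial's own method: `inject_and_apply` is a hypothetical helper name, and it calls plain `kubectl` and `istioctl` (prefix them with `sudo` if your setup needs it, as the `ks` alias does).

```shell
# Inject the sidecar into a manifest, apply the result, and wait until the
# rollout has finished.
# $1 = manifest file, $2 = namespace, $3 = deployment name.
inject_and_apply() {
  istioctl kube-inject -f "$1" | kubectl apply -n "$2" -f - &&
    kubectl rollout status "deployment/$3" -n "$2"
}
```

For the example in this note the call would be `inject_and_apply jiuxi.yaml jiuxi-ns jiuxi`. The warning printed by `kubectl apply` appears because the Deployment was originally created with `kubectl create` without `--save-config`.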

The generated file (jiuxi-inject.yaml) looks like this:

    apiVersion: apps/v1
    kind: Deployment
    metadata:
      creationTimestamp: null
      labels:
        app: jiuxi
      name: jiuxi
    spec:
      replicas: 1
      selector:
        matchLabels:
          app: jiuxi
      strategy: {}
      template:
        metadata:
          annotations:
            sidecar.istio.io/interceptionMode: REDIRECT
            sidecar.istio.io/status: '{"version":"ed4e6e8ed4ffa03fe7d5b9d2c27cc8c478625ade64be2859cae3da0db9e5ee2e","initContainers":["istio-init"],"containers":["istio-proxy"],"volumes":["istio-envoy","istio-data","istio-podinfo","istiod-ca-cert"],"imagePullSecrets":null}'
            traffic.sidecar.istio.io/excludeInboundPorts: "15020"
            traffic.sidecar.istio.io/includeInboundPorts: "80"
            traffic.sidecar.istio.io/includeOutboundIPRanges: '*'
          creationTimestamp: null
          labels:
            app: jiuxi
            istio.io/rev: ""
            security.istio.io/tlsMode: istio
        spec:
          containers:
          - image: nginx:1.14-alpine
            imagePullPolicy: IfNotPresent
            name: nginx
            ports:
            - containerPort: 80
            resources: {}
          - args:
            - proxy
            - sidecar
            - --domain
            - $(POD_NAMESPACE).svc.cluster.local
            - --serviceCluster
            - jiuxi.$(POD_NAMESPACE)
            - --proxyLogLevel=warning
            - --proxyComponentLogLevel=misc:error
            - --trust-domain=cluster.local
            - --concurrency
            - "2"
            env:
            - name: JWT_POLICY
              value: first-party-jwt
            - name: PILOT_CERT_PROVIDER
              value: istiod
            - name: CA_ADDR
              value: istiod.istio-system.svc:15012
            - name: POD_NAME
              valueFrom:
                fieldRef:
                  fieldPath: metadata.name
            - name: POD_NAMESPACE
              valueFrom:
                fieldRef:
                  fieldPath: metadata.namespace
            - name: INSTANCE_IP
              valueFrom:
                fieldRef:
                  fieldPath: status.podIP
            - name: SERVICE_ACCOUNT
              valueFrom:
                fieldRef:
                  fieldPath: spec.serviceAccountName
            - name: HOST_IP
              valueFrom:
                fieldRef:
                  fieldPath: status.hostIP
            - name: CANONICAL_SERVICE
              valueFrom:
                fieldRef:
                  fieldPath: metadata.labels['service.istio.io/canonical-name']
            - name: CANONICAL_REVISION
              valueFrom:
                fieldRef:
                  fieldPath: metadata.labels['service.istio.io/canonical-revision']
            - name: PROXY_CONFIG
              value: |
                {"proxyMetadata":{"DNS_AGENT":""}}
            - name: ISTIO_META_POD_PORTS
              value: |-
                [
                    {"containerPort":80}
                ]
            - name: ISTIO_META_APP_CONTAINERS
              value: |-
                [
                    nginx
                ]
            - name: ISTIO_META_CLUSTER_ID
              value: Kubernetes
            - name: ISTIO_META_INTERCEPTION_MODE
              value: REDIRECT
            - name: ISTIO_META_WORKLOAD_NAME
              value: jiuxi
            - name: ISTIO_META_OWNER
              value: kubernetes://apis/apps/v1/namespaces/default/deployments/jiuxi
            - name: ISTIO_META_MESH_ID
              value: cluster.local
            - name: DNS_AGENT
            - name: ISTIO_KUBE_APP_PROBERS
              value: '{}'
            image: docker.io/istio/proxyv2:1.6.5
            imagePullPolicy: Always
            name: istio-proxy
            ports:
            - containerPort: 15090
              name: http-envoy-prom
              protocol: TCP
            readinessProbe:
              failureThreshold: 30
              httpGet:
                path: /healthz/ready
                port: 15021
              initialDelaySeconds: 1
              periodSeconds: 2
            resources:
              limits:
                cpu: "2"
                memory: 1Gi
              requests:
                cpu: 10m
                memory: 40Mi
            securityContext:
              allowPrivilegeEscalation: false
              capabilities:
                drop:
                - ALL
              privileged: false
              readOnlyRootFilesystem: true
              runAsGroup: 1337
              runAsNonRoot: true
              runAsUser: 1337
            volumeMounts:
            - mountPath: /var/run/secrets/istio
              name: istiod-ca-cert
            - mountPath: /var/lib/istio/data
              name: istio-data
            - mountPath: /etc/istio/proxy
              name: istio-envoy
            - mountPath: /etc/istio/pod
              name: istio-podinfo
          initContainers:
          - args:
            - istio-iptables
            - -p
            - "15001"
            - -z
            - "15006"
            - -u
            - "1337"
            - -m
            - REDIRECT
            - -i
            - '*'
            - -x
            - ""
            - -b
            - '*'
            - -d
            - 15090,15021,15020
            env:
            - name: DNS_AGENT
            image: docker.io/istio/proxyv2:1.6.5
            imagePullPolicy: Always
            name: istio-init
            resources:
              limits:
                cpu: 100m
                memory: 50Mi
              requests:
                cpu: 10m
                memory: 10Mi
            securityContext:
              allowPrivilegeEscalation: false
              capabilities:
                add:
                - NET_ADMIN
                - NET_RAW
                drop:
                - ALL
              privileged: false
              readOnlyRootFilesystem: false
              runAsGroup: 0
              runAsNonRoot: false
              runAsUser: 0
          securityContext:
            fsGroup: 1337
          volumes:
          - emptyDir:
              medium: Memory
            name: istio-envoy
          - emptyDir: {}
            name: istio-data
          - downwardAPI:
              items:
              - fieldRef:
                  fieldPath: metadata.labels
                path: labels
              - fieldRef:
                  fieldPath: metadata.annotations
                path: annotations
            name: istio-podinfo
          - configMap:
              name: istio-ca-root-cert
            name: istiod-ca-cert
    status: {}
    ---

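From the manifest we can see the following images and containers:

nginx:1.14-alpine

docker.io/istio/proxyv2:1.6.5

initContainers [istio-init, which runs istio-iptables]: an init container that exits as soon as it has finished; it sets up the iptables rules in the pod's network namespace.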
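Instead of reading the whole manifest, the image references can be pulled out with a one-liner. The helper below is a rough sketch for illustration: `list_images` is a hypothetical name, and it assumes a block-style YAML file where each image appears on its own `image:` line, as in the generated file above.

```shell
# Print the distinct image references found in a manifest file ($1).
# Matches both "image: x" and "- image: x"; "imagePullPolicy:" does not match.
list_images() {
  sed -n 's/^[[:space:]]*\(- \)\{0,1\}image:[[:space:]]*//p' "$1" | sort -u
}
```

Usage: `list_images jiuxi-inject.yaml`. The istio-proxy and istio-init containers share the same proxyv2 image, so it is listed only once.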

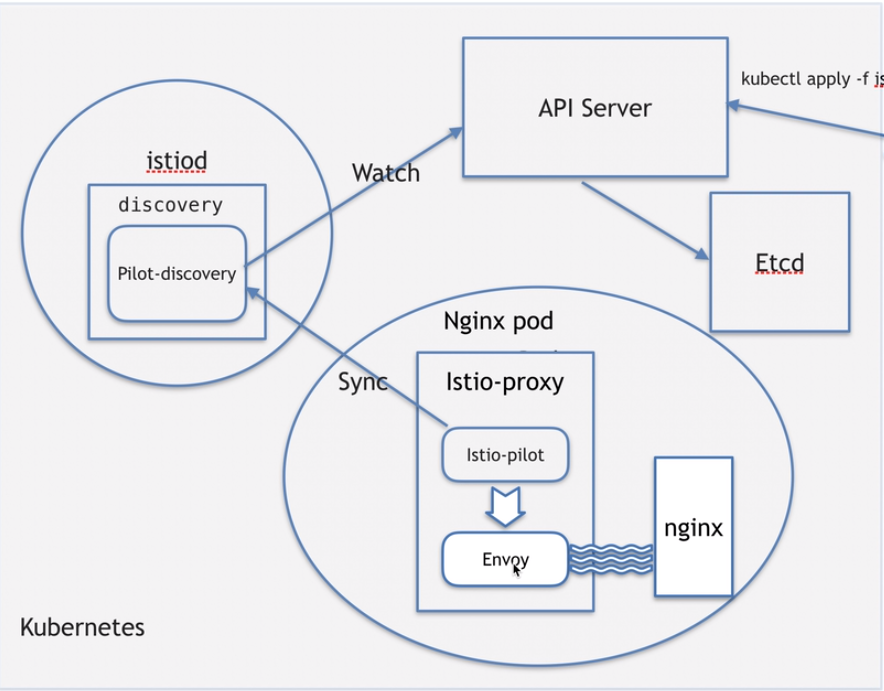

Let's look at the processes running in the istio-proxy container. First enter the container, then run `ps aux`:

    $ ks exec -it -n jiuxi-ns jiuxi-5479899b95-fvtsb -c istio-proxy bash
    kubectl exec [POD] [COMMAND] is DEPRECATED and will be removed in a future version. Use kubectl kubectl exec [POD] -- [COMMAND] instead.
    $ ps aux
    USER       PID %CPU %MEM    VSZ   RSS TTY      STAT START   TIME COMMAND
    istio-p+     1  0.1  0.3 760748 48940 ?        Ssl  01:08   0:00 /usr/local/bin/pilot-agent proxy sidecar --domain j
    istio-p+    17  0.4  0.3 177808 54240 ?        Sl   01:08   0:02 /usr/local/bin/envoy -c etc/istio/proxy/envoy-rev0.
    istio-p+    29  0.0  0.0  18512  3420 pts/0    Ss   01:17   0:00 bash
    istio-p+    38  0.0  0.0  34408  2780 pts/0    R+   01:17   0:00 ps aux

We can see two processes: pilot-agent and envoy.

| Process | Role |
|---|---|
| pilot-agent | Starts the envoy process, monitors whether it is healthy, and reports to pilot-discovery |
| envoy | A lightweight proxy |
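The deprecation notice printed by `kubectl exec` asks for a `--` between the pod and the command. A non-interactive variant of the process check, written in that form, might look like the sketch below; `sidecar_ps` is a hypothetical helper name and it calls plain `kubectl` rather than the `ks` alias.

```shell
# List the processes of the istio-proxy sidecar without opening a shell.
# $1 = pod name, $2 = namespace.
# Everything after "--" is the command that runs inside the container.
sidecar_ps() {
  kubectl exec -n "$2" "$1" -c istio-proxy -- ps aux
}
```

Usage: `sidecar_ps jiuxi-5479899b95-fvtsb jiuxi-ns`.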

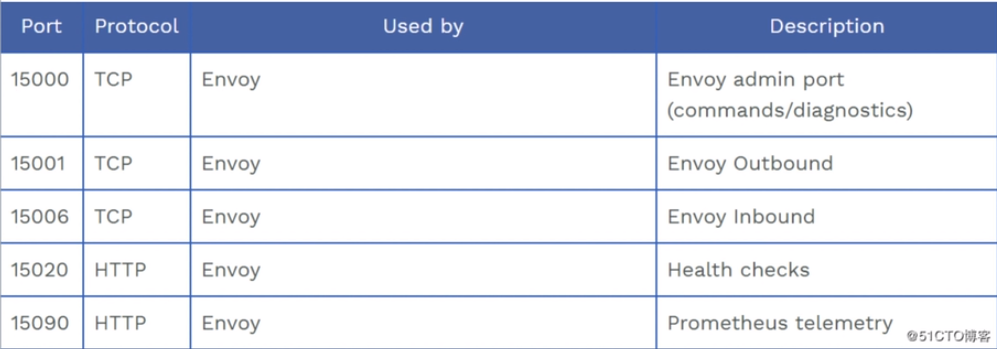

Network changes after injection

Before injection:

    tcp        0      0 0.0.0.0:80              0.0.0.0:*               LISTEN      1/nginx: master pro

After injection:

    tcp        0      0 0.0.0.0:15021           0.0.0.0:*               LISTEN      -
    tcp        0      0 0.0.0.0:80              0.0.0.0:*               LISTEN      1/nginx: master pro
    tcp        0      0 0.0.0.0:15090           0.0.0.0:*               LISTEN      -
    tcp        0      0 127.0.0.1:15000         0.0.0.0:*               LISTEN      -
    tcp        0      0 0.0.0.0:15001           0.0.0.0:*               LISTEN      -
    tcp        0      0 0.0.0.0:15006           0.0.0.0:*               LISTEN      -
    tcp        0      0 :::15020                :::*                    LISTEN      -
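To compare the two listings more easily, the port numbers can be extracted and sorted. The note does not say which command produced the listings; the sketch below assumes `netstat -lntp`-style output (local address in column 4, state in column 6), and `listening_ports` is a hypothetical helper name.

```shell
# Reads netstat-style lines on stdin and prints the listening ports,
# one per line, sorted numerically and without duplicates.
listening_ports() {
  awk '$6 == "LISTEN" {
    n = split($4, a, ":")   # the port is whatever follows the last colon
    print a[n]
  }' | sort -n -u
}
```

Feeding it the listing taken before injection yields only port 80; the listing taken after injection additionally yields the 150xx ports opened by the sidecar.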

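The new ports are opened by the istio-proxy sidecar, while redirecting the pod's traffic to them is the job of the init container. Let's look at what istio-init actually did: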

    $ ks logs -f -n jiuxi-ns jiuxi-5479899b95-fvtsb -c istio-init
    Environment:
    ------------
    ENVOY_PORT=
    INBOUND_CAPTURE_PORT=
    ISTIO_INBOUND_INTERCEPTION_MODE=
    ISTIO_INBOUND_TPROXY_MARK=
    ISTIO_INBOUND_TPROXY_ROUTE_TABLE=
    ISTIO_INBOUND_PORTS=
    ISTIO_LOCAL_EXCLUDE_PORTS=
    ISTIO_SERVICE_CIDR=
    ISTIO_SERVICE_EXCLUDE_CIDR=

    Variables:
    ----------
    PROXY_PORT=15001
    PROXY_INBOUND_CAPTURE_PORT=15006
    PROXY_UID=1337
    PROXY_GID=1337
    INBOUND_INTERCEPTION_MODE=REDIRECT
    INBOUND_TPROXY_MARK=1337
    INBOUND_TPROXY_ROUTE_TABLE=133
    INBOUND_PORTS_INCLUDE=*
    INBOUND_PORTS_EXCLUDE=15090,15021,15020
    OUTBOUND_IP_RANGES_INCLUDE=*
    OUTBOUND_IP_RANGES_EXCLUDE=
    OUTBOUND_PORTS_EXCLUDE=
    KUBEVIRT_INTERFACES=
    ENABLE_INBOUND_IPV6=false

    Writing following contents to rules file: /tmp/iptables-rules-1597280892232020591.txt745629639

Here we can see that four chains are created in the iptables nat table: ISTIO_REDIRECT, ISTIO_IN_REDIRECT, ISTIO_INBOUND and ISTIO_OUTPUT.

    * nat
    -N ISTIO_REDIRECT
    -N ISTIO_IN_REDIRECT
    -N ISTIO_INBOUND
    -N ISTIO_OUTPUT
    -A ISTIO_REDIRECT -p tcp -j REDIRECT --to-ports 15001
    -A ISTIO_IN_REDIRECT -p tcp -j REDIRECT --to-ports 15006
    -A PREROUTING -p tcp -j ISTIO_INBOUND
    -A ISTIO_INBOUND -p tcp --dport 22 -j RETURN
    -A ISTIO_INBOUND -p tcp --dport 15090 -j RETURN
    -A ISTIO_INBOUND -p tcp --dport 15021 -j RETURN
    -A ISTIO_INBOUND -p tcp --dport 15020 -j RETURN
    -A ISTIO_INBOUND -p tcp -j ISTIO_IN_REDIRECT
    -A OUTPUT -p tcp -j ISTIO_OUTPUT
    -A ISTIO_OUTPUT -o lo -s 127.0.0.6/32 -j RETURN
    -A ISTIO_OUTPUT -o lo ! -d 127.0.0.1/32 -m owner --uid-owner 1337 -j ISTIO_IN_REDIRECT
    -A ISTIO_OUTPUT -o lo -m owner ! --uid-owner 1337 -j RETURN
    -A ISTIO_OUTPUT -m owner --uid-owner 1337 -j RETURN
    -A ISTIO_OUTPUT -o lo ! -d 127.0.0.1/32 -m owner --gid-owner 1337 -j ISTIO_IN_REDIRECT
    -A ISTIO_OUTPUT -o lo -m owner ! --gid-owner 1337 -j RETURN
    -A ISTIO_OUTPUT -m owner --gid-owner 1337 -j RETURN
    -A ISTIO_OUTPUT -d 127.0.0.1/32 -j RETURN
    -A ISTIO_OUTPUT -j ISTIO_REDIRECT
    COMMIT

    iptables-restore --noflush /tmp/iptables-rules-1597280892232020591.txt745629639
    Writing following contents to rules file: /tmp/ip6tables-rules-1597280892337693438.txt863269498

    ip6tables-restore --noflush /tmp/ip6tables-rules-1597280892337693438.txt863269498
    iptables-save
    # Generated by iptables-save v1.6.1 on Thu Aug 13 01:08:12 2020
    *nat
    :PREROUTING ACCEPT [0:0]
    :INPUT ACCEPT [0:0]
    :OUTPUT ACCEPT [0:0]
    :POSTROUTING ACCEPT [0:0]
    :ISTIO_INBOUND - [0:0]
    :ISTIO_IN_REDIRECT - [0:0]
    :ISTIO_OUTPUT - [0:0]
    :ISTIO_REDIRECT - [0:0]
    -A PREROUTING -p tcp -j ISTIO_INBOUND
    -A OUTPUT -p tcp -j ISTIO_OUTPUT
    -A ISTIO_INBOUND -p tcp -m tcp --dport 22 -j RETURN
    -A ISTIO_INBOUND -p tcp -m tcp --dport 15090 -j RETURN
    -A ISTIO_INBOUND -p tcp -m tcp --dport 15021 -j RETURN
    -A ISTIO_INBOUND -p tcp -m tcp --dport 15020 -j RETURN
    -A ISTIO_INBOUND -p tcp -j ISTIO_IN_REDIRECT
    -A ISTIO_IN_REDIRECT -p tcp -j REDIRECT --to-ports 15006
    -A ISTIO_OUTPUT -s 127.0.0.6/32 -o lo -j RETURN
    -A ISTIO_OUTPUT ! -d 127.0.0.1/32 -o lo -m owner --uid-owner 1337 -j ISTIO_IN_REDIRECT
    -A ISTIO_OUTPUT -o lo -m owner ! --uid-owner 1337 -j RETURN
    -A ISTIO_OUTPUT -m owner --uid-owner 1337 -j RETURN
    -A ISTIO_OUTPUT ! -d 127.0.0.1/32 -o lo -m owner --gid-owner 1337 -j ISTIO_IN_REDIRECT
    -A ISTIO_OUTPUT -o lo -m owner ! --gid-owner 1337 -j RETURN
    -A ISTIO_OUTPUT -m owner --gid-owner 1337 -j RETURN
    -A ISTIO_OUTPUT -d 127.0.0.1/32 -j RETURN
    -A ISTIO_OUTPUT -j ISTIO_REDIRECT
    -A ISTIO_REDIRECT -p tcp -j REDIRECT --to-ports 15001
    COMMIT
    # Completed on Thu Aug 13 01:08:12 2020
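To make the rules above easier to follow, here is a small model of the decisions they encode. It is a deliberately simplified sketch and does not touch iptables: both function names are hypothetical, the `-s 127.0.0.6/32` rule is left out, and the gid rules are folded into the uid check on the assumption that a process runs either as 1337:1337 (the proxy) or as neither.

```shell
# ISTIO_INBOUND: what happens to an inbound TCP connection to port $1.
inbound_target() {
  case "$1" in
    22|15090|15021|15020) echo "RETURN" ;;   # excluded ports bypass Envoy
    *) echo "REDIRECT:15006" ;;              # everything else goes to the inbound listener
  esac
}

# ISTIO_OUTPUT: what happens to an outbound TCP connection.
# $1 = uid of the sending process, $2 = destination IP, $3 = output interface.
outbound_target() {
  if [ "$3" = lo ]; then
    # The proxy reaching a non-127.0.0.1 address over loopback is treated as inbound.
    if [ "$1" = 1337 ] && [ "$2" != 127.0.0.1 ]; then echo "REDIRECT:15006"; return; fi
    # Application traffic over loopback is left alone.
    if [ "$1" != 1337 ]; then echo "RETURN"; return; fi
  fi
  # Traffic sent by the proxy itself must not be captured again.
  if [ "$1" = 1337 ]; then echo "RETURN"; return; fi
  # Anything addressed to localhost is left alone.
  if [ "$2" = 127.0.0.1 ]; then echo "RETURN"; return; fi
  # Everything else is handed to Envoy's outbound listener.
  echo "REDIRECT:15001"
}
```

For example, `inbound_target 80` prints `REDIRECT:15006`, and `outbound_target 0 10.0.0.5 eth0` (an application process calling another address) prints `REDIRECT:15001`.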

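The extra listening ports serve the following purposes:

| Port | Purpose |
|---|---|
| 15000 | Envoy admin interface (bound to 127.0.0.1 only) |
| 15001 | Envoy outbound listener; target of the ISTIO_REDIRECT chain |
| 15006 | Envoy inbound listener; target of the ISTIO_IN_REDIRECT chain |
| 15020 | pilot-agent status port |
| 15021 | Health check endpoint used by the readiness probe (/healthz/ready) |
| 15090 | Envoy Prometheus metrics (http-envoy-prom) |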

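Automatic injection

For automatic injection we only need to add a label to the namespace; Istio then injects the sidecar on its own:

    $ ks label ns jiuxi-ns istio-injection=enabled

Pods created in that namespace afterwards are injected automatically.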
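The label only affects pods created after it is set, so pods that already exist keep running without a sidecar until they are recreated. The sketch below shows one possible way to set the label, check it, and recreate the pods of a Deployment; both function names are hypothetical, and plain `kubectl` is used instead of the `ks` alias.

```shell
# Enable automatic injection for a namespace ($1), then show the namespace
# with an extra column for the istio-injection label.
enable_auto_injection() {
  kubectl label namespace "$1" istio-injection=enabled --overwrite &&
    kubectl get namespace "$1" -L istio-injection
}

# Recreate the pods of a Deployment so that they pass through injection.
# $1 = deployment name, $2 = namespace.
restart_for_injection() {
  kubectl rollout restart "deployment/$1" -n "$2"
}
```

For the namespace used in this note: `enable_auto_injection jiuxi-ns`, followed by `restart_for_injection jiuxi jiuxi-ns`.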

Tutorial source

https://www.bilibili.com/video/BV1vE411p7wX?p=4